How Is the Clean Step in ETL Done? An Example

What is ETL?

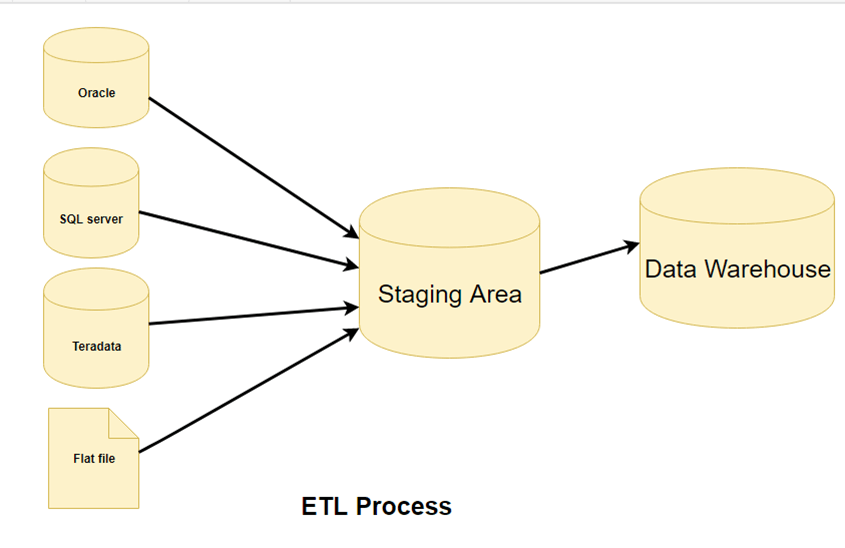

ETL is a process that extracts data from different source systems, then transforms the data (for example, by applying calculations, concatenations, etc.) and finally loads the data into the Data Warehouse system. The full form of ETL is Extract, Transform and Load.
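The three stages can be sketched in a few lines of Python. This is a minimal illustration, not a real ETL tool: the in-memory `SOURCE_ROWS` list stands in for a source system, a plain list stands in for the warehouse, and the function names `extract`, `transform` and `load` are hypothetical.

```python
from __future__ import annotations

# Hypothetical source rows, stored as text, as they might arrive from a source system.
SOURCE_ROWS = [
    {"id": "1", "country": "us", "amount": "120.50"},
    {"id": "2", "country": "de", "amount": "99.50"},
]


def extract() -> list[dict]:
    """Extract: copy the raw rows out of the source without changing them."""
    return [dict(row) for row in SOURCE_ROWS]


def transform(rows: list[dict]) -> list[dict]:
    """Transform: convert text to proper types and standardize country codes."""
    return [
        {"id": int(r["id"]), "country": r["country"].upper(), "amount": float(r["amount"])}
        for r in rows
    ]


def load(rows: list[dict], warehouse: list[dict]) -> int:
    """Load: append the transformed rows to the target and return how many were loaded."""
    warehouse.extend(rows)
    return len(rows)


warehouse: list[dict] = []
loaded = load(transform(extract()), warehouse)
print(loaded, warehouse[0])  # 2 {'id': 1, 'country': 'US', 'amount': 120.5}
```

In a real pipeline each function would talk to a database or file instead of a list, but the order of the three steps stays the same.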

It's tempting to think that creating a Data warehouse is simply a matter of extracting data from multiple sources and loading it into the database of a Data warehouse. This is far from the truth; it requires a complex ETL process. The ETL process requires active input from various stakeholders, including developers, analysts, testers and top executives, and is technically challenging.

In order to maintain its value as a tool for decision-makers, a Data warehouse system needs to change as the business changes. ETL is a recurring activity (daily, weekly, monthly) of a Data warehouse system and needs to be agile, automated, and well documented.

In this ETL tutorial, you will learn:

- What is ETL?

- Why do you need ETL?

- ETL Process in Data Warehouses

- Step 1) Extraction

- Step 2) Transformation

- Step 3) Loading

- ETL Tools

- Best practices ETL process

Why do you need ETL?

There are many reasons for adopting ETL in the organization:

- It helps companies analyze their business data to make critical business decisions.

- Transactional databases cannot answer complex business questions that can be answered with ETL.

- A Data Warehouse provides a common data repository.

- ETL provides a method of moving the data from various sources into a data warehouse.

- As data sources change, the Data Warehouse will automatically update.

- A well-designed and documented ETL system is almost essential to the success of a Data Warehouse project.

- It allows verification of data transformation, aggregation and calculation rules.

- The ETL process allows sample data comparison between the source and the target system.

- The ETL process can perform complex transformations and requires an extra area to store the data.

- ETL helps migrate data into a Data Warehouse, converting the various formats and types to adhere to one consistent system.

- ETL is a predefined process for accessing and manipulating source data into the target database.

- ETL in a data warehouse offers deep historical context for the business.

- It helps improve productivity because it codifies and reuses processes without a need for technical skills.

ETL Process in Data Warehouses

ETL is a three-step process

Step 1) Extraction

In this step of the ETL architecture, data is extracted from the source system into the staging area. Transformations, if any, are done in the staging area so that the performance of the source system is not degraded. Also, if corrupted data is copied directly from the source into the Data warehouse database, rollback will be a challenge. The staging area gives an opportunity to validate extracted data before it moves into the Data warehouse.

A Data warehouse needs to integrate systems that have different DBMSs, hardware, operating systems and communication protocols. Sources could include legacy applications like mainframes, customized applications, point-of-contact devices like ATMs, call switches, text files, spreadsheets, ERP, and data from vendors and partners, among others.

Hence, one needs a logical data map before data is extracted and loaded physically. This data map describes the relationship between the source and target data.
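A logical data map can be as simple as a lookup from each source column to its target column. The sketch below assumes hypothetical source systems (`crm`, `erp`), column names, and a `map_row` helper; real mappings usually also record the transformation rule for each column.

```python
# Hypothetical logical data map: (source system, source column) -> target column.
DATA_MAP = {
    ("crm", "cust_no"): "customer_id",
    ("crm", "cust_nm"): "customer_name",
    ("erp", "ctry"): "country_code",
}


def map_row(system: str, row: dict) -> dict:
    """Rename a source row's columns to their target names.

    Columns that have no entry in the data map are dropped.
    """
    return {
        DATA_MAP[(system, column)]: value
        for column, value in row.items()
        if (system, column) in DATA_MAP
    }


print(map_row("crm", {"cust_no": 7, "cust_nm": "Google Inc", "fax": None}))
# {'customer_id': 7, 'customer_name': 'Google Inc'}
```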

Three Data Extraction methods:

- Full Extraction

- Partial Extraction without update notification

- Partial Extraction with update notification
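The three methods differ in how the extractor decides which rows to read. In this sketch the `SOURCE` rows, the `modified` timestamp column and the `changed_ids` change list are all assumptions; comparing a last-modified timestamp with the time of the previous run is just one common way to find changes when the source sends no notification.

```python
from datetime import datetime

# Hypothetical source table; each row carries a last-modified timestamp.
SOURCE = [
    {"id": 1, "name": "Jon", "modified": datetime(2024, 1, 1)},
    {"id": 2, "name": "John", "modified": datetime(2024, 1, 5)},
    {"id": 3, "name": "Ann", "modified": datetime(2024, 1, 9)},
]


def full_extract(source):
    """Full extraction: read every row on every run."""
    return list(source)


def partial_extract_without_notification(source, last_run):
    """The source does not say what changed, so detect changes ourselves by
    comparing each row's timestamp with the time of the previous run."""
    return [row for row in source if row["modified"] > last_run]


def partial_extract_with_notification(source, changed_ids):
    """The source supplies the IDs of changed rows (for example, from a change
    log), so only those rows are read."""
    wanted = set(changed_ids)
    return [row for row in source if row["id"] in wanted]


print(len(full_extract(SOURCE)))  # 3
print([r["id"] for r in partial_extract_without_notification(SOURCE, datetime(2024, 1, 4))])  # [2, 3]
print([r["id"] for r in partial_extract_with_notification(SOURCE, [3])])  # [3]
```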

Irrespective of the method used, extraction should not affect the performance and response time of the source systems. These source systems are live production databases. Any slowdown or locking could affect the company's bottom line.

Some validations are done during Extraction:

- Reconcile records with the source data

- Make sure that no spam/unwanted data is loaded

- Data type check

- Remove all types of duplicate/fragmented data

- Check whether all the keys are in place or not
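A few of these checks (count reconciliation, key check, data type check, duplicate removal) are sketched below on rows represented as dictionaries. The helper names and the `id` key column are hypothetical.

```python
def validate_extract(source_count, rows, key="id", types=None):
    """Run basic extraction checks and return a list of problems found."""
    problems = []
    # Reconcile the number of extracted records with the source.
    if len(rows) != source_count:
        problems.append(f"count mismatch: source={source_count}, extracted={len(rows)}")
    # Check that every row has its key.
    missing = [i for i, r in enumerate(rows) if r.get(key) is None]
    if missing:
        problems.append(f"missing key in rows {missing}")
    # Data type check for each column listed in `types`.
    for column, expected in (types or {}).items():
        bad = [i for i, r in enumerate(rows) if column in r and not isinstance(r[column], expected)]
        if bad:
            problems.append(f"{column} not {expected.__name__} in rows {bad}")
    return problems


def drop_duplicates(rows, key="id"):
    """Remove duplicate records, keeping the first row seen for each key."""
    seen, unique = set(), []
    for r in rows:
        if r.get(key) not in seen:
            seen.add(r.get(key))
            unique.append(r)
    return unique


rows = [{"id": 1, "amount": 10.0}, {"id": 1, "amount": 10.0}, {"id": None, "amount": "x"}]
print(validate_extract(3, rows, types={"amount": float}))
# ['missing key in rows [2]', 'amount not float in rows [2]']
print(len(drop_duplicates(rows)))  # 2
```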

Step 2) Transformation

Data extracted from the source server is raw and not usable in its original form. Therefore, it needs to be cleansed, mapped and transformed. In fact, this is the key step where the ETL process adds value and changes data such that insightful BI reports can be generated.

It is one of the important ETL concepts, where you apply a set of functions to the extracted data. Data that does not require any transformation is called direct move or pass-through data.

In the transformation step, you can perform customized operations on data. For instance, the user may want sum-of-sales revenue, which is not stored in the database. Or the first name and the last name in a table may be in different columns; it is possible to concatenate them before loading.
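Both examples can be handled in one pass: concatenate the name columns, then sum the sales for each customer. The `orders` rows and their column names are hypothetical.

```python
from collections import defaultdict

# Hypothetical extracted order rows.
orders = [
    {"first_name": "Ada", "last_name": "Lovelace", "amount": 120.0},
    {"first_name": "Ada", "last_name": "Lovelace", "amount": 30.0},
    {"first_name": "Alan", "last_name": "Turing", "amount": 50.0},
]


def sales_by_customer(rows):
    """Concatenate first and last names, then sum the sales per full name."""
    totals = defaultdict(float)
    for r in rows:
        full_name = f"{r['first_name']} {r['last_name']}"
        totals[full_name] += r["amount"]
    return dict(totals)


print(sales_by_customer(orders))  # {'Ada Lovelace': 150.0, 'Alan Turing': 50.0}
```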

The following are data integrity problems:

- Different spellings of the same person's name, like Jon, John, etc.

- There are multiple ways to denote a company name, like Google, Google Inc.

- Use of different names, like Cleaveland, Cleveland.

- There may be a case where different account numbers are generated by various applications for the same customer.

- In some data, required fields remain blank.

- Invalid product data is collected at the POS, as manual entry can lead to mistakes.
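Lookup tables are one common way to handle the first four problems. The sketch maps known spelling variants to a single standard value and maps per-application account numbers to one master customer ID; the tables, applications and IDs shown are made up for illustration.

```python
# Hypothetical lookup tables: known variant (lower-cased) -> standard value.
COMPANY_LOOKUP = {"google": "Google", "google inc": "Google", "google inc.": "Google"}
CITY_LOOKUP = {"cleaveland": "Cleveland", "cleveland": "Cleveland"}

# Hypothetical cross-reference: (application, local account number) -> master customer ID.
ACCOUNT_XREF = {("billing", "A-17"): "CUST-001", ("web", "9921"): "CUST-001"}


def standardize(value, lookup):
    """Return the standard spelling for a known variant; otherwise return the
    trimmed value unchanged so it can be reviewed later."""
    if value is None:
        return None
    cleaned = value.strip()
    return lookup.get(cleaned.lower(), cleaned)


def master_customer_id(application, account_no):
    """Map an application's own account number to one master ID (None if unknown)."""
    return ACCOUNT_XREF.get((application, account_no))


print(standardize(" Google Inc. ", COMPANY_LOOKUP))  # Google
print(standardize("Cleaveland", CITY_LOOKUP))  # Cleveland
print(standardize("Akron", CITY_LOOKUP))  # Akron
print(master_customer_id("billing", "A-17") == master_customer_id("web", "9921"))  # True
```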

Validations done during this stage:

- Filtering: select only certain columns to load

- Using rules and lookup tables for data standardization

- Character set conversion and encoding handling

- Conversion of units of measurement, like date-time conversion, currency conversions, numerical conversions, etc.

- Data threshold validation check. For instance, age cannot be more than 2 digits.

- Data flow validation from the staging area to the intermediate tables.

- Required fields should not be left blank.

- Cleaning (for example, mapping NULL to 0, or Gender Male to "M" and Female to "F", etc.)

- Splitting a column into multiple columns and merging multiple columns into a single column.

- Transposing rows and columns.

- Using lookups to merge data.

- Using any complex data validation (e.g., if the first two columns in a row are empty, automatically reject the row from processing)
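Several of these rules can be combined into one row-level cleaning function. This is a sketch under stated assumptions: rows are dicts, the column names (`full_name`, `gender`, `age`, `quantity`) and the `GENDER_CODES` table are hypothetical, ages are assumed to be whole numbers from 0 to 99, and a rejected row is signalled by returning `None`.

```python
GENDER_CODES = {"male": "M", "female": "F"}  # hypothetical code table


def clean_row(row):
    """Apply some of the rules above to one row (a dict).

    Returns the cleaned row, or None if the row is rejected.
    """
    # Complex validation: reject the row if its first two columns are both empty.
    if all(v in (None, "") for v in list(row.values())[:2]):
        return None
    cleaned = dict(row)
    # Cleaning: map NULL (None) to 0 in the quantity column, if present.
    if "quantity" in cleaned and cleaned["quantity"] is None:
        cleaned["quantity"] = 0
    # Cleaning: map Male/Female to M/F; unknown values are left as they are.
    if cleaned.get("gender") is not None:
        cleaned["gender"] = GENDER_CODES.get(cleaned["gender"].strip().lower(), cleaned["gender"])
    # Threshold validation: age must have at most two digits (assumed 0-99).
    age = cleaned.get("age")
    if age is not None and not 0 <= age <= 99:
        return None
    # Splitting a column: a non-empty "full_name" becomes first_name and last_name.
    if cleaned.get("full_name"):
        first, _, last = cleaned.pop("full_name").partition(" ")
        cleaned["first_name"], cleaned["last_name"] = first, last
    return cleaned


print(clean_row({"full_name": "Ada Lovelace", "gender": "Female", "age": 36, "quantity": None}))
# {'gender': 'F', 'age': 36, 'quantity': 0, 'first_name': 'Ada', 'last_name': 'Lovelace'}
print(clean_row({"full_name": "", "gender": None, "age": 40, "quantity": 1}))  # None
print(clean_row({"full_name": "Bob Smith", "gender": "Male", "age": 130, "quantity": 2}))  # None
```

Returning `None` keeps the sketch short; a production job would more likely route rejected rows to an error table together with the reason.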

Step 3) Loading

Loading data into the target data warehouse database is the last step of the ETL process. In a typical Data warehouse, a huge volume of data needs to be loaded in a relatively short period (nights). Hence, the load process should be optimized for performance.

In case of load failure, recovery mechanisms should be configured to restart from the point of failure without loss of data integrity. Data Warehouse admins need to monitor, resume, or cancel loads according to prevailing server performance.
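One way to restart from the point of failure is to commit each batch together with a checkpoint in the same transaction. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a warehouse; the `sales` and `load_checkpoint` tables, the `load_in_batches` helper and the batch size are assumptions.

```python
import sqlite3


def load_in_batches(conn, rows, batch_size=2):
    """Load (id, amount) rows in batches. Each batch commits together with a
    checkpoint, so a rerun resumes at the first batch that did not commit."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, amount REAL)")
    conn.execute("CREATE TABLE IF NOT EXISTS load_checkpoint (job TEXT PRIMARY KEY, next_row INTEGER)")
    saved = conn.execute("SELECT next_row FROM load_checkpoint WHERE job = 'sales'").fetchone()
    start = saved[0] if saved else 0
    for i in range(start, len(rows), batch_size):
        batch = rows[i:i + batch_size]
        with conn:  # commits on success, rolls back the whole batch on error
            conn.executemany("INSERT INTO sales (id, amount) VALUES (?, ?)", batch)
            conn.execute(
                "INSERT OR REPLACE INTO load_checkpoint (job, next_row) VALUES ('sales', ?)",
                (i + len(batch),),
            )
    return conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]


conn = sqlite3.connect(":memory:")
rows = [(1, 10.0), (2, 20.0), (3, 30.0), (3, 31.0), (5, 50.0)]  # the 4th row reuses id 3
try:
    load_in_batches(conn, rows)
except sqlite3.IntegrityError:
    pass  # the second batch failed and was rolled back; the first batch is kept
print(conn.execute("SELECT next_row FROM load_checkpoint").fetchone())  # (2,)
rows[3] = (4, 40.0)  # fix the bad source row, then rerun the load
print(load_in_batches(conn, rows))  # 5
```

Because a failed batch is rolled back together with its checkpoint update, the rerun starts at the third row and does not insert the first two rows a second time.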

Types of Loading:

- Initial Load: populating all the Data Warehouse tables

- Incremental Load: applying ongoing changes as and when needed, periodically.

- Full Refresh: erasing the contents of one or more tables and reloading them with fresh data.
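The three load types can be compared side by side with `sqlite3`. The `dim_customer` table and helper names are hypothetical, and `INSERT OR REPLACE` is used here as a simple SQLite-specific way to insert-or-overwrite a row by primary key; other databases offer `MERGE` or similar statements.

```python
import sqlite3


def create_table(conn):
    conn.execute("CREATE TABLE IF NOT EXISTS dim_customer (id INTEGER PRIMARY KEY, name TEXT)")


def initial_load(conn, rows):
    """Initial load: populate the (empty) table with every row."""
    with conn:
        conn.executemany("INSERT INTO dim_customer (id, name) VALUES (?, ?)", rows)


def incremental_load(conn, changes):
    """Incremental load: insert new rows and overwrite changed ones; rows not
    mentioned in `changes` are left untouched."""
    with conn:
        conn.executemany("INSERT OR REPLACE INTO dim_customer (id, name) VALUES (?, ?)", changes)


def full_refresh(conn, rows):
    """Full refresh: erase the table's contents and reload it with fresh data."""
    with conn:
        conn.execute("DELETE FROM dim_customer")
        conn.executemany("INSERT INTO dim_customer (id, name) VALUES (?, ?)", rows)


def snapshot(conn):
    return conn.execute("SELECT id, name FROM dim_customer ORDER BY id").fetchall()


conn = sqlite3.connect(":memory:")
create_table(conn)
initial_load(conn, [(1, "Jon"), (2, "Google Inc")])
incremental_load(conn, [(2, "Google"), (3, "Ann")])
after_incremental = snapshot(conn)
print(after_incremental)  # [(1, 'Jon'), (2, 'Google'), (3, 'Ann')]
full_refresh(conn, [(1, "John")])
after_refresh = snapshot(conn)
print(after_refresh)  # [(1, 'John')]
```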

Load verification

- Ensure that the key field data is neither missing nor null.

- Test modeling views based on the target tables.

- Check combined values and calculated measures.

- Data checks in the dimension table as well as the history table.

- Check the BI reports on the loaded fact and dimension tables.
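The key-field and calculated-measure checks lend themselves to simple SQL queries run right after the load. This sketch assumes a `sqlite3` connection, a hypothetical `fact_sales` table and `verify_load` helper, and that the expected row count and total come from the source or staging area. Table and column names are interpolated into the SQL text, so they must come from trusted configuration, never from user input.

```python
import sqlite3


def verify_load(conn, table, key, expected_rows, measure=None, expected_total=None):
    """Return a list of failed checks for a freshly loaded table."""
    failures = []
    # Key field data must be neither missing nor null.
    nulls = conn.execute(f"SELECT COUNT(*) FROM {table} WHERE {key} IS NULL").fetchone()[0]
    if nulls:
        failures.append(f"rows with NULL {key}: {nulls}")
    # Row count must match what the source/staging area says was sent.
    count = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
    if count != expected_rows:
        failures.append(f"row count {count} != expected {expected_rows}")
    # A calculated measure (here a SUM) must match the expected total.
    if measure is not None:
        total = conn.execute(f"SELECT COALESCE(SUM({measure}), 0) FROM {table}").fetchone()[0]
        if abs(total - expected_total) > 1e-9:
            failures.append(f"SUM({measure}) {total} != expected {expected_total}")
    return failures


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE fact_sales (customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO fact_sales VALUES (?, ?)", [(1, 10.0), (None, 5.0), (2, 2.5)])
print(verify_load(conn, "fact_sales", "customer_id", 3, "amount", 17.5))
# ['rows with NULL customer_id: 1']
```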

ETL Tools

There are many Data Warehousing tools available in the market. Here are some of the most prominent ones:

1. MarkLogic:

MarkLogic is a data warehousing solution that makes data integration easier and faster using an array of enterprise features. It can query different types of data, like documents, relationships, and metadata.

https://www.marklogic.com/product/getting-started/

2. Oracle:

Oracle is the industry-leading database. It offers a broad range of Data Warehouse solutions, both on-premises and in the cloud. It helps optimize customer experiences by increasing operational efficiency.

https://www.oracle.com/index.html

3. Amazon Redshift:

Amazon Redshift is a data warehouse tool. It is a simple and cost-effective tool to analyze all types of data using standard SQL and existing BI tools. It also allows running complex queries against petabytes of structured data.

https://aws.amazon.com/redshift/?nc2=h_m1

Best practices ETL process

Following are the best practices for ETL Process steps:

Never try to cleanse all the data:

Every organization would like to have all its data clean, but most are not ready to pay for it or not ready to wait. Cleaning it all would simply take too long, so it is better not to try to cleanse all the data.

Do not skip cleansing altogether:

Always plan to clean something, because the biggest reason for building the Data Warehouse is to offer cleaner and more reliable data.

Determine the cost of cleansing the data:

Before cleansing all the dirty data, it is important for you to determine the cleansing cost for every dirty data element.

To speed up query processing, have auxiliary views and indexes:

To reduce storage costs, store summarized data on disk or tape. Also, a trade-off is required between the volume of data to be stored and its detailed usage: trade off the level of granularity of the data to decrease storage costs.
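The sketch below shows these ideas together in `sqlite3`: an index on a frequently filtered column, an auxiliary view that defines a daily summary, and a summary table that stores that coarser granularity. The table names and data are hypothetical. Note that a plain SQLite view is not materialized, so it is the summary table, not the view, that actually stores fewer rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE fact_sales (sale_date TEXT, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?)",
    [("2024-01-01", "east", 10.0), ("2024-01-01", "west", 5.0), ("2024-01-02", "east", 7.5)],
)
# Index on a column that queries often filter by, e.g. WHERE region = 'east'.
conn.execute("CREATE INDEX idx_fact_sales_region ON fact_sales (region)")
# Auxiliary view: a reusable definition of daily totals (SQLite does not store its rows).
conn.execute(
    "CREATE VIEW v_daily_sales AS "
    "SELECT sale_date, SUM(amount) AS total FROM fact_sales GROUP BY sale_date"
)
# Summary table: stores the daily totals, trading row-level detail for fewer rows.
conn.execute("CREATE TABLE agg_daily_sales AS SELECT sale_date, total FROM v_daily_sales")
summary = conn.execute("SELECT sale_date, total FROM agg_daily_sales ORDER BY sale_date").fetchall()
print(summary)  # [('2024-01-01', 15.0), ('2024-01-02', 7.5)]
```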

Summary:

- ETL stands for Extract, Transform and Load.

- ETL provides a method of moving the data from various sources into a data warehouse.

- In the first step, extraction, data is extracted from the source system into the staging area.

- In the transformation step, the data extracted from the source is cleansed and transformed.

- Loading data into the target data warehouse is the final step of the ETL process.

Source: https://www.guru99.com/etl-extract-load-process.html

Posted by: jenningsthassences.blogspot.com